Preface

I like the language JS very much. I feel that it is the same as the C language. In the C language, many things need to be implemented by ourselves, so that we can exert unlimited creativity and imagination. In JS, although many things have been provided in V8, but with JS, you can still create a lot of interesting things, as well as interesting writing methods.

In addition, JS should be the only language I have seen that does not implement network and file functions. Network and files are a very important ability, and for programmers, they are also very core and basic knowledge. Fortunately, Node.js was created. On the basis of JS, Node.js uses the capabilities provided by V8 and Libuv, which greatly expands and enriches the capabilities of JS, especially the network and files, so that I can not only use JS, you can also use functions such as network and files, which is one of the reasons why I gradually turned to Node.js, and one of the reasons why I started to study the source code of Node.js.

Although Node.js satisfies my preferences and technical needs, at the beginning, I did not devote myself to code research, but occasionally looked at the implementation of some modules. The real beginning was to do "Node.js". .js is how to use Libuv to implement event loop and asynchrony”. Since then, most of my spare time and energy have been devoted to source code research.

I started with Libuv first, because Libuv is one of the core of Node.js. Since I have studied some Linux source code, and I have been learning some principles and implementations of the operating system, I did not encounter much difficulty when reading Libuv. The use and principles of C language functions can basically be understood. , the point is that each logic needs to be clearly defined.

The methods I use are annotations and drawings, and I personally prefer to write annotations. Although code is the best comment, I am willing to take the time to explain the background and meaning of the code with comments, and comments will make most people understand the meaning of the code faster. When I read Libuv, I also read some JS and C++ code interspersed.

The way I read Node.js source code is, pick a module and analyze it vertically from the JS layer to the C++ layer, then to the Libuv layer.

After reading Libuv, the next step is to read the code of the JS layer. Although JS is easy to understand, there is a lot of code in the JS layer, and I feel that the logic is very confusing, so so far, I still have not read it carefully. follow-up plans.

In Node.js, C++ is the glue layer. In many cases, C++ is not used, and it does not affect the reading of Node.js source code, because C++ is often only a function of transparent transmission. It sends the request of the JS layer through V8, passed to Libuv, and then in reverse, so I read the C++ layer at the end.

I think the C++ layer is the most difficult. At this time, I have to start reading the source code of V8 again. It is very difficult to understand V8. I chose almost the earliest version 0.1.5, and then combined with the 8.x version. Through the early version, first learn the general principles of V8 and some early implementation details. Because the subsequent versions have changed a lot, but more of them are enhancements and optimizations of functions, and many core concepts have not changed. Lost direction and lost momentum.

However, even in the early version, many contents are still very complicated. The reason for combining the new version is because some functions were not implemented in the previous version. To understand its principle at this time, you can only look at the code of the new version and have the experience of the early version, reading the new version of the code also has certain benefits, and also know some reading skills to some extent.

Most of the code in Node.js is in the C++ and JS layers, so I am still reading the code of these two layers constantly. Or according to the vertical analysis of modules. Reading Node.js code gave me a better understanding of the principles of Node.js and a better understanding of JS. However, the amount of code is very large and requires a steady stream of time and energy investment. But when it comes to technology, it’s a wonderful feeling to know what it is, and it’s not a good feeling that you make a living off a technology but don’t know much about it.

Although reading the source code will not bring you immediate and rapid benefits, there are several benefits that are inevitable. The first is that it will determine your height, and the second is that when you write the code, youInstead of seeing some cold, lifeless characters. This may be a bit exaggerated, but you understand the principles of technology, and when you use technology, you will indeed have different experiences, and your thinking will also have more changes.

The third is to improve your learning ability. When you have more understanding and understanding of the underlying principles, you will learn more quickly when you are learning other technologies. For example, if you understand the principle of epoll, then you can see When Nginx, Redis, Libuv and other source code are used, the event-driven logic can basically be understood very quickly.

I am very happy to have these experiences, and I have invested a lot of time and energy. I hope to have more understanding and understanding of Node.js in the future, and I hope to have more practice in the direction of Node.js.

The purpose of this book

The original intention of reading the Node.js source code is to give yourself a deep understanding of the principles of Node.js, but I found that many students are also very interested in the principles of Node.js, because they have been writing about the principles of Node.js in their spare time.

Node.js source code analysis articles (based on Node.js V10 and V14), so I plan to organize these contents into a systematic book, so that interested students can systematically understand and understand the principles of Node.js. However, I hope that readers will not only learn the knowledge of Node.js from the book, but also learn how to read the source code of Node.js, and can complete the research of the source code independently. I also hope that more students will share their experiences. This book is not the whole of Node.js, but try to talk about it as much as possible.

The source code is very many, intricate, and there may be inaccuracies in understanding. Welcome to exchange. Because I have seen some implementations of early Linux kernels (0.11 and 1.2.13) and early V8 (0.1.5), the article will cite some of the codes in order to let readers know more about the general implementation principle of a knowledge point. If you are interested, you can read the relevant code by yourself.

The structure of the book

This book is divided into twenty-two chapters, and the code explained is based on the Linux system.

-

Mainly introduces the composition and overall working principle of Node.js, and analyzes the process of starting Node.js. Finally, it introduces the evolution of the server architecture and the selected architecture of Node.js.

-

It mainly introduces the basic data structure and general logic in Node.js, which will be used in later chapters.

-

Mainly introduces the event loop of Libuv, which is the core of Node.js. This chapter specifically introduces the implementation of each stage in the event loop.

-

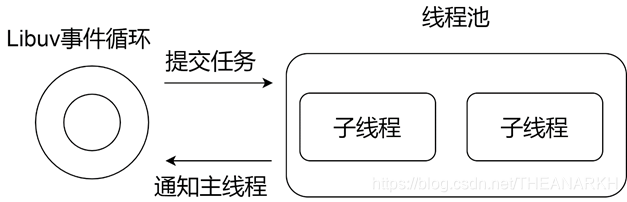

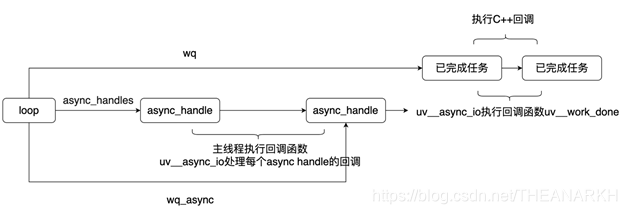

Mainly analyze the implementation of thread pool in Libuv. Libuv thread pool is very important to Node.js. Many modules in Node.js need to use thread pool, including crypto, fs, dns, etc. Without a thread pool, the functionality of Node.js would be greatly reduced. At the same time, the communication mechanism between Libuv neutron thread and main thread is analyzed. It is also suitable for other child threads to communicate with the main thread.

-

Mainly analyze the implementation of flow in Libuv. Flow is used in many places in Node.js source code, which can be said to be a very core concept.

-

Mainly analyze some important modules and general logic of C++ layer in Node.js.

-

Mainly analyze the signal processing mechanism of Node.js. Signal is another way of inter-process communication.

-

Mainly analyze the implementation of the dns module of Node.js, including the use and principle of cares.

-

It mainly analyzes the implementation and use of the pipe module (Unix domain) in Node.js. The Unix domain is a way to realize inter-process communication, which solves the problem that processes without inheritance cannot communicate. And support for passing file descriptors greatly enhances the capabilities of Node.js.

-

Mainly analyze the implementation of timer module in Node.js. A timer is a powerful tool for timing tasks.

-

Mainly analyze the implementation of Node.js setImmediate and nextTick.

-

Mainly introduces the implementation of file modules in Node.js. File operations are functions that we often use.

-

Mainly introduces the implementation of the process module in Node.js. Multi-process enables Node.js to take advantage of multi-core capabilities.

-

Mainly introduces the implementation of thread module in Node.js. Multi-process and multi-thread have similar functions but there are some differences.

-

Mainly introduces the use and implementation principle of the cluster module in Node.js. The cluster module encapsulates the multi-process capability, making Node.j a server architecture that can use multi-process and utilize the multi-core capability.

-

Mainly analyze the implementation and related content of UDP in Node.js.

-

Mainly analyze the implementation of TCP module in Node.js. TCP is the core module of Node.js. Our commonly used HTTP and HTTPS are based on net module.

-

It mainly introduces the implementation of the HTTP module and some principles of the HTTP protocol.

-

Mainly analyze the principle of loading various modules in Node.js, and deeply understand what the require function of Node.js does.

-

Mainly introduce some methods to expand Node.js, use Node.js to expand Node.js.

-

It mainly introduces the implementation of JS layer Stream. The logic of the Stream module is very complicated, so I briefly explained it.

-

Mainly introduces the implementation of the event module in Node.js. Although the event module is simple, it is the core module of Node.js.

Readers This book is aimed at students who have some experience in using Node.js and are interested in the principles of Node.js, because this book analyzes the principles of Node.js from the perspective of Node.js source code, some of which are C, C++, so the reader needs to have a certain C and C++ foundation. In addition, it will be better to have a certain operating system, computer network, and V8 foundation.

Reading Suggestions

It is recommended to read the first few basic and general contents, then read the implementation of a single module, and finally, if you are interested, read the chapter on how to expand Node.js. If you are already familiar with Node.js and are just interested in a certain module or content, you can go directly to a certain chapter. When I first started to read the Node.js source code, I chose the V10.x version. Later, Node.js has been updated to V14, so some of the codes in the book are V10 and V14. Libuv is V1.23. You can get it on my github.

Source code reading

It is recommended that the source code of Node.js consists of JS, C++, and C.

-

Libuv is written in C language. In addition to understanding C syntax, understanding Libuv is more about the understanding of operating systems and networks. Some classic books can be referred to, such as "Unix Network Programming" 1 and 2 two volumes, "Linux System Programming Manual" upper and lower volumes, The Definitive Guide to TCP/IP, etc. There are also Linux API documentation and excellent articles on the Internet for reference.

-

C++ mainly uses the capabilities provided by V8 to expand JS, and some functions are implemented in C++. In general, C++ is more of a glue layer, using V8 as a bridge to connect Libuv and JS. I don't know C++, and it doesn't completely affect the reading of the source code, but it will be better to know C++. To read the C++ layer code, in addition to the syntax, you also need to have a certain understanding and understanding of the concept and use of V8.

-

JS code I believe that students who learn Node.js have no problem.

Node.js Composition and Principle

1.1 Introduction to Node.js

Node.js is an event-driven single-process single-threaded application. Single threading is embodied in Node.js maintaining a series of tasks in a single thread, and then In the event loop, the nodes in the task queue are continuously consumed, and new tasks are continuously generated, which continuously drives the execution of Node.js in the generation and consumption of tasks. From another point of view, Node.js can be said to be multi-threaded, because the bottom layer of Node.js also maintains a thread pool, which is mainly used to process some tasks such as file IO, DNS, and CPU computing.

Node.js is mainly composed of V8, Libuv, and some other third-party modules (cares asynchronous DNS parsing library, HTTP parser, HTTP2 parser, compression library, encryption and decryption library, etc.). The Node.js source code is divided into three layers, namely JS, C++, and C.

Libuv is written in C language. The C++ layer mainly provides the JS layer with the ability to interact with the bottom layer through V8. The C++ layer also implements some functions. The JS layer It is user-oriented and provides users with an interface to call the underlying layer.

1.1.1 JS Engine V8

Node.js is a JS runtime based on V8. It utilizes the capabilities provided by V8 and greatly expands the capabilities of JS. This extension does not add new language features to JS, but expands functional modules. For example, in the front end, we can use the Date function, but we cannot use the TCP function, because this function is not built-in in JS.

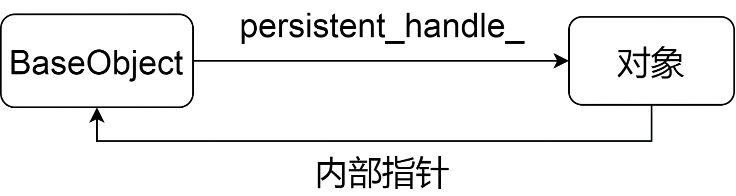

In Node.js, we can use TCP, which is what Node.js does, allowing users to use functions that do not exist in JS, such as files, networks. The core parts of Node.js are Libuv and V8. V8 is not only responsible for executing JS, but also supports custom extensions, realizing the ability of JS to call C++ and C++ to call JS.

For example, we can write a C++ module and call it in JS. Node.js makes use of this ability to expand the function. All C and C++ modules called by the JS layer are done through V8.

1.1.2 Libuv

Libuv is the underlying asynchronous IO library of Node.js, but it provides functions not only IO, but also processes, threads, signals, timers, inter-process communication, etc., and Libuv smoothes the differences between various operating systems. The functions provided by Libuv are roughly as follows • Full-featured event loop backed by epoll, kqueue, IOCP, event ports.

- Asynchronous TCP and UDP sockets

- Asynchronous DNS resolution

- Asynchronous file and file system operations

- File system events

- ANSI escape code controlled TTY

- IPC with socket sharing, using Unix domain sockets or named pipes (Windows)

- Child processes

- Thread pool

- Signal handling

- High resolution clock

- Threading and synchronization primitives

The implementation of Libuv is a classic producer-consumer model. In the entire life cycle of Libuv, each round of the cycle will process the task queue maintained by each phase, and then execute the callbacks of the nodes in the task queue one by one. In the callback, new tasks are continuously produced, thereby continuously driving Libuv.

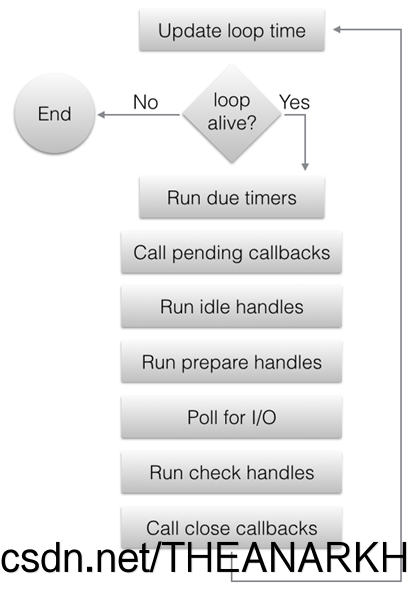

The following is the overall execution process of Libuv

From the above figure, we roughly understand that Libuv is divided into several stages, and then continuously performs the tasks in each stage in a loop. Let's take a look at each stage in detail.

-

Update the current time. At the beginning of each event loop, Libuv will update the current time to the variable. The remaining operations of this cycle can use this variable to obtain the current time to avoid excessive Too many system calls affect performance, the additional effect is that the timing is not so precise. But in a round of event loop, Libuv will actively update this time when necessary. For example, when returning after blocking the timeout time in epoll, it will update the current time variable again.

-

If the event loop is in the alive state, start processing each stage of the event loop, otherwise exit the event loop.

What does alive state mean? If there are handles in the active and ref states, the request in the active state or the handle in the closing state, the event loop is considered to be alive (the details will be discussed later).

-

timer stage: determine which node in the minimum heap has timed out, execute its callback.

-

pending stage: execute pending callback. Generally speaking, all IO callbacks (network, file, DNS) will be executed in the Poll IO stage, but in some cases, the callback of the Poll IO stage will be delayed until the next loop execution, so this callback is executed in the pending stage For example, if there is an error in the IO callback or the writing of data is successful, the callback will be executed in the pending phase of the next event loop.

-

Idle stage: The event loop will be executed every time (idle does not mean that the event loop will be executed when it is idle).

-

The prepare phase: similar to the idle phase.

-

Poll IO stage: call the IO multiplexing interface provided by each platform (for example, epoll mode under Linux), wait for the timeout time at most, and execute the corresponding callback when returning.

The calculation rule of timeout:

- timeout is 0 if the time loop is running in UV_RUN_NOWAIT mode.

- If the time loop is about to exit (uv_stop was called), timeout is 0.

- If there is no active handle or request, the timeout is 0.

- If there are nodes in the queue with the idle phase, the timeout is 0.

- If there is a handle waiting to be closed (that is, uv_close is adjusted), the timeout is 0.

- If none of the above is satisfied, take the node with the fastest timeout in the timer phase as timeout.

- If none of the above is satisfied, the timeout is equal to -1, that is, it will block until the condition is satisfied.

- Check stage: same as idle and prepare. 9. Closing phase: Execute the callback passed in when calling the uv_close function. 10. If

Libuv is running in UV_RUN_ONCE mode, the event loop is about to exit. But there is a situation that the timeout value of the Poll IO stage is the value of the node in the timer stage, and the Poll IO stage is returned because of the timeout, that is, no event occurs, and no IO callback is executed.

At this time, it needs to be executed once timer stage. Because a node timed out. 11. One round of event loop ends. If Libuv runs in UV_RUN_NOWAIT or UV_RUN_ONCE mode, it exits the event loop. If it runs in UV_RUN_DEFAULT mode and the status is alive, the next round of loop starts. Otherwise exit the event loop.

Below I can understand the basic principle of libuv through an example.

#include

#include

int64_t counter = 0;

void wait_for_a_while(uv_idle_t* handle) {

counter++;

if (counter >= 10e6)

uv_idle_stop(handle);

}

int main() {

uv_idle_t idler;

// Get the core structure of the event loop. and initialize an idle

uv_idle_init(uv_default_loop(), &idler);

// Insert a task into the idle phase of the event loop uv_idle_start(&idler, wait_for_a_while);

// Start the event loop uv_run(uv_default_loop(), UV_RUN_DEFAULT);

// Destroy libuv related data uv_loop_close(uv_default_loop());

return 0;

}

To use Libuv, we first need to obtain uv_loop_t, the core structure of Libuv. uv_loop_t is a very large structure, which records the data of the entire life cycle of Libuv. uv_default_loop provides us with a uv_loop_t structure that has been initialized by default. Of course, we can also allocate one and initialize it ourselves.

uv_loop_t* uv_default_loop(void) {

// cache if (default_loop_ptr != NULL)

return default_loop_ptr;

if (uv_loop_init(&default_loop_struct))

return NULL;

default_loop_ptr = &default_loop_struct;

return default_loop_ptr;

}

Libuv maintains a global uv*loop_t structure, which is initialized with uv_loop_init, and does not intend to explain the uv_loop_init function, because it probably initializes each field of the uv_loop_t structure. Then we look at the functions of the uv_idle* series.

1 uv_idle_init

int uv_idle_init(uv_loop_t* loop, uv_idle_t* handle) {

/*

Initialize the type of handle, belong to the loop, mark UV_HANDLE_REF,

And insert handle into the end of the loop->handle_queue queue */

uv__handle_init(loop, (uv_handle_t*)handle, UV_IDLE);

handle->idle_cb = NULL;

return 0;

}

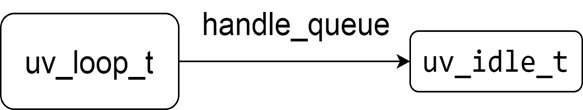

After executing the uv_idle_init function, the memory view of Libuv is shown in the following figure

2 uv_idle_start

int uv_idle_start(uv_idle_t* handle, uv_idle_cb cb) {

// If the start function has been executed, return directly if (uv__is_active(handle)) return 0;

// Insert the handle into the idle queue in the loop QUEUE_INSERT_HEAD(&handle->loop->idle_handles, &handle->queue);

// Mount the callback, which will be executed in the next cycle handle->idle_cb = cb;

/*

Set the UV_HANDLE_ACTIVE flag bit, and increase the number of handles in the loop by one,

When init, just mount the handle to the loop, when start, the handle is in the active state*/

uv__handle_start(handle);

return 0;

}

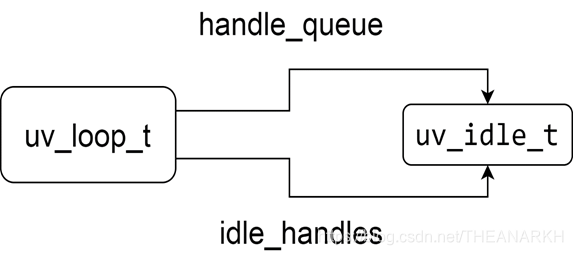

The memory view after executing uv_idle_start is shown in the following figure.

Then execute uv_run to enter Libuv's event loop.

int uv_run(uv_loop_t* loop, uv_run_mode mode) {

int timeout;

int r;

int ran_pending;

// Submit the task to loop before uv_run

r = uv__loop_alive(loop);

// no tasks to process or uv_stop called

while (r != 0 && loop->stop_flag == 0) {

// Process the idle queue uv__run_idle(loop);

}

// exited because uv_stop was called, reset the flag

if (loop->stop_flag != 0)

loop->stop_flag = 0;

/*

Returns whether there are still active tasks (handle or request),

The agent can execute uv_run again

*/

return r;

When the handle queue, Libuv will exit. Later, we will analyze the principle of Libuv event loop in detail.

1.1.3 Other third-party libraries

The third-party libraries in Node.js include asynchronous DNS parsing (cares), HTTP parser (the old version uses http_parser, the new version uses llhttp), HTTP2 parser (nghttp2), decompression and compression library (zlib ), encryption and decryption library (openssl), etc., not introduced one by one.

1.2 How Node.js works

1.2.1 How does Node.js extend JS functionality?

V8 provides a mechanism that allows us to call the functions provided by C++ and C language modules at the JS layer. It is through this mechanism that Node.js realizes the expansion of JS capabilities. Node.js does a lot of things at the bottom layer, implements many functions, and then exposes the interface to users at the JS layer, which reduces user costs and improves development efficiency.

1.2.2 How to add a custom function in V8?

// Define Handle in C++ Test = FunctionTemplate::New(cb);

global->Set(String::New("Test"), Test);

// use const test = new Test() in JS;

We first have a perceptual understanding. In the following chapters, we will specifically explain how to use V8 to expand the functions of JS.

1.2.3 How does Node.js achieve expansion?

Node.js does not expand an object for each function, and then mounts it in a global variable, but expands a process object, and then expands the js function through process.binding. Node.js defines a global JS object process, which maps to a C++ object process, and maintains a linked list of C++ modules at the bottom layer. JS accesses the C++ process object by calling the process.binding of the JS layer, thereby accessing the C++ module ( Similar to accessing JS's Object, Date, etc.). However, Node.js version 14 has been changed to the internalBinding method, and the C++ module can be accessed through the internalBinding. The principle is similar.

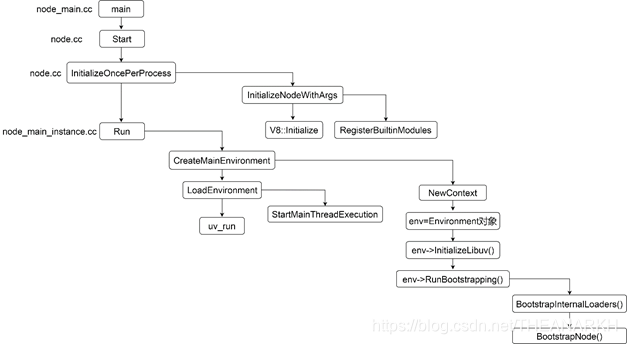

1.3 Node.js startup process

The following is the main flowchart of Node.js startup, as shown in Figure 1-4.

Let's go from top to bottom and look at what each process does.

1.3.1 Registering C++ modules

The function of the RegisterBuiltinModules function (node_binding.cc) is to register C++ modules.

void RegisterBuiltinModules() {

#define V(modname) _register_##modname();

NODE_BUILTIN_MODULES(V)

#undef V

}

NODE_BUILTIN_MODULES is a C language macro, which is expanded as follows (omitting similar logic)

void RegisterBuiltinModules() {

#define V(modname) _register_##modname();

V(tcp_wrap)

V(timers)

...other modules #undef V

}

Further expand as follows

void RegisterBuiltinModules() {

_register_tcp_wrap();

_register_timers();

}

A series of functions starting with _register are executed, but we cannot find these functions in the Node.js source code, because these functions are defined by macros in the file defined by each C++ module (the last line of the .cc file). Take the tcp_wrap module as an example to see how it does it. The last sentence of the code in the file tcp_wrap.cc NODE_MODULE_CONTEXT_AWARE_INTERNAL(tcp_wrap, node::TCPWrap::Initialize) macro expansion is

#define NODE_MODULE_CONTEXT_AWARE_INTERNAL(modname, regfunc)

NODE_MODULE_CONTEXT_AWARE_CPP(modname,

regfunc,

nullptr,

NM_F_INTERNAL)

Continue to expand

#define NODE_MODULE_CONTEXT_AWARE_CPP(modname, regfunc, priv, flags

static node::node_module _module = {

NODE_MODULE_VERSION,

flags,

nullptr,

**FILE**,

nullptr,

(node::addon_context_register_func)(regfunc),

NODE_STRINGIFY(modname),

priv,

nullptr};

void _register_tcp_wrap() { node_module_register(&_module); }

We see that the bottom layer of each C++ module defines a function starting with _register. When Node.js starts, these functions will be executed one by one. Let's continue to look at what these functions do, but before that, let's take a look at the data structures that represent C++ modules in Node.js.

struct node_module {

int nm_version;

unsigned int nm_flags;

void* nm_dso_handle;

const char* nm_filename;

node::addon_register_func nm_register_func;

node::addon_context_register_func nm_context_register_func;

const char* nm_modname;

void* nm_priv;

struct node_module* nm_link;

};

We see that the function at the beginning of _register calls node_module_register and passes in a node_module data structure, so let's take a look at the implementation of node_module_register

void node_module_register(void* m) {

struct node_module* mp = reinterpret_cast (m);

if (mp->nm_flags & NM_F_INTERNAL) {

mp->nm_link = modlist_internal;

modlist_internal = mp;

} else if (!node_is_initialized) {

mp->nm_flags = NM_F_LINKED;

mp->nm_link = modlist_linked;

modlist_linked = mp;

} else {

thread_local_modpending = mp;

}

}

We only look at AssignToContext and CreateProperties, set_env_vars will explain the process chapter.

1.1 AssignToContext

inline void Environment::AssignToContext(v8::Local context,

const ContextInfo& info) {

// Save the env object in the context context->SetAlignedPointerInEmbedderData(ContextEmbedderIndex::kEnvironment, this);

// Used by Environment::GetCurrent to know that we are on a node context.

context->SetAlignedPointerInEmbedderData(ContextEmbedderIndex::kContextTag, Environment::kNodeContextTagPtr);

}

AssignToContext is used to save the relationship between context and env. This logic is very important, because when the code is executed later, we will enter the field of V8. At this time, we only know Isolate and context. If we don't save the relationship between context and env, we don't know which env we currently belong to. Let's see how to get the corresponding env.

inline Environment* Environment::GetCurrent(v8::Isolate* isolate) {

v8::HandleScope handle_scope(isolate);

return GetCurrent(isolate->GetCurrentContext());

}

inline Environment* Environment::GetCurrent(v8::Local context) {

return static_cast (

context->GetAlignedPointerFromEmbedderData(ContextEmbedderIndex::kEnvironment));

}

1.2 CreateProperties

Next, let's take a look at the logic of creating a process object in CreateProperties.

Isolate* isolate = env->isolate();

EscapableHandleScope scope(isolate);

Local context = env->context();

// Apply for a function template Local process_template = FunctionTemplate::New(isolate);

process_template->SetClassName(env->process_string());

// Save the function Local generated by the function template process_ctor;

// Save the object Local created by the function generated by the function module process;

if (!process_template->GetFunction(context).ToLocal(&process_ctor)|| !process_ctor->NewInstance(context).ToLocal(&process)) {

return MaybeLocal ();

}

The object saved by the process is the process object we use in the JS layer. When Node.js is initialized, some properties are also mounted.

READONLY_PROPERTY(process,

"version",

FIXED_ONE_BYTE_STRING(env->isolate(),

NODE_VERSION));

READONLY_STRING_PROPERTY(process, "arch", per_process::metadata.arch);......

After creating the process object, Node.js saves the process to env.

Local process_object = node::CreateProcessObject(this).FromMaybe(Local ());

set_process_object(process_object)

1.3.3 Initialize Libuv task

The logic in the InitializeLibuv function is to submit a task to Libuv.

void Environment::InitializeLibuv(bool start*profiler_idle_notifier) {

HandleScope handle_scope(isolate());

Context::Scope context_scope(context());

CHECK_EQ(0, uv_timer_init(event_loop(), timer_handle()));

uv_unref(reinterpret_cast (timer_handle()));

uv_check_init(event_loop(), immediate_check_handle());

uv_unref(reinterpret_cast (immediate_check_handle()));

uv_idle_init(event_loop(), immediate_idle_handle());

uv_check_start(immediate_check_handle(), CheckImmediate);

uv_prepare_init(event_loop(), &idle_prepare_handle*);

uv*check_init(event_loop(), &idle_check_handle*);

uv*async_init(

event_loop(),

&task_queues_async*,

[](uv*async_t* async) {

Environment* env = ContainerOf(

&Environment::task_queues_async*, async);

env->CleanupFinalizationGroups();

env->RunAndClearNativeImmediates();

});

uv*unref(reinterpret_cast (&idle_prepare_handle*));

uv*unref(reinterpret_cast (&idle_check_handle*));

uv*unref(reinterpret_cast (&task_queues_async*));

// …

}

These functions are all provided by Libuv, which are to insert task nodes into different stages of Libuv, and uv_unref is to modify the state.

1 timer_handle is the data structure that implements the timer in Node.js, corresponding to the time phase of Libuv 2 immediate_check_handle is the data structure that implements setImmediate in Node.js, corresponding to the check phase of Libuv.

3 task queues_async is used for sub-thread and main thread communication.

1.3.4 Initialize Loader and Execution Contexts

s.binding = function binding(module) {

module = String(module);

if (internalBindingWhitelist.has(module)) {

return internalBinding(module);

}

throw new Error(`No such module: ${module}`);

};

Mount the binding function in the process object (that is, the process object we usually use). This function is mainly used for built-in JS modules, which we will often see later. The logic of binding is to find the corresponding C++ module according to the module name. The above processing is for Node.js to load C++ modules through the binding function at the JS layer. We know that there are native JS modules (JS files in the lib folder) in Node.js. Next, let's take a look at the processing of loading native JS modules. Node.js defines a NativeModule class that is responsible for loading native JS modules. A variable is also defined to hold the list of names of native JS modules.

static map = new Map(moduleIds.map((id) => [id, new NativeModule(id)]));

The main logic of NativeModule is as follows: 1. The code of the native JS module is converted into characters and stored in the node_javascript.cc file. The NativeModule is responsible for the loading of the native JS module, that is, compilation and execution. 2 Provide a require function to load native JS modules. For modules whose file paths start with internal, they cannot be required by users.

This is the general logic of native JS module loading. Specifically, we will analyze it in the Node.js module loading chapter. After executing internal/bootstrap/loaders.js, finally return three variables to the C++ layer.

return {

internalBinding,

NativeModule,

require: nativeModuleRequire,

};

The C++ layer saves two of the functions, which are used to load the built-in C++ module and the function of the native JS module respectively.

set_internal_binding_loader(internal_binding_loader.As ());

set_native_module_require(require.As ());

So far, internal/bootstrap/loaders.js is analyzed.

2 Initialize the execution context

BootstrapNode is responsible for initializing the execution context, the code is as follows

EscapableHandleScope scope(isolate*);

// Get global variables and set the global property Local global = context()->Global();

global->Set(context(), FIXED_ONE_BYTE_STRING(isolate*, "global"), global).Check();

Parameters process, require, internalBinding, primordials when executing internal/bootstrap/node.js

std::vector > node_params = {

process_string(),

require_string(),

internal_binding_string(),

primordials_string()};

std::vector > node_args = {

process_object(),

// native module loader native_module_require(),

// C++ module loader internal_binding_loader(),

primordials()};

MaybeLocal result = ExecuteBootstrapper(

this, "internal/bootstrap/node", &node_params, &node_args);

Set a global property on the global object, which is the global object we use in Node.js. Then execute internal/bootstrap/node.js to set some variables (refer to internal/bootstrap/node.js for details).

process.cpuUsage = wrapped.cpuUsage;

process.resourceUsage = wrapped.resourceUsage;

process.memoryUsage = wrapped.memoryUsage;

process.kill = wrapped.kill;

process.exit = wrapped.exit;

set global variable

defineOperation(global, 'clearInterval', timers.clearInterval);

defineOperation(global, 'clearTimeout', timers.clearTimeout);

defineOperation(global, 'setInterval', timers.setInterval);

defineOperation(global, 'setTimeout', timers.setTimeout);

ObjectDefineProperty(global, 'process', {

value: process,

enumerable: false,

writable: true,

configurable: true

});

1.3.5 Execute the user JS file

StartMainThreadExecution to perform some initialization work, and then execute the user JS code.

- Mount attributes to the process object

Execute the patchProcessObject function (exported in node_process_methods.cc) to mount some attributes to the process object, not listed one by one.

// process.argv

process->Set(context,

FIXED_ONE_BYTE_STRING(isolate, "argv"),

ToV8Value(context, env->argv()).ToLocalChecked()).Check();

READONLY_PROPERTY(process,

"pid",

Integer::New(isolate, uv_os_getpid()));

Because Node.js adds support for threads, some properties need to be hacked. For example, when process.exit is used in a thread, a single thread is exited instead of the entire process, and functions such as exit need special handling. Later chapters will explain in detail.

- Handling inter-process communication

function setupChildProcessIpcChannel() {

if (process.env.NODE_CHANNEL_FD) {

const fd = parseInt(process.env.NODE_CHANNEL_FD, 10);

delete process.env.NODE_CHANNEL_FD;

const serializationMode =

process.env.NODE_CHANNEL_SERIALIZATION_MODE || "json";

delete process.env.NODE_CHANNEL_SERIALIZATION_MODE;

require("child_process")._forkChild(fd, serializationMode);

}

}

The environment variable NODE_CHANNEL_FD is set when the child process is created. If it is indicated that the currently started process is a child process, inter-process communication needs to be handled.

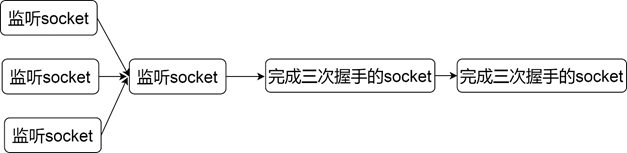

- I will think deeply or look at its implementation. As we all know, when a server starts, it will listen to a port, which is actually a new socket. Then if a connection arrives, we can get the socket corresponding to the new connection through accept.

Is this socket and the listening socket the same? In fact, sockets are divided into listening type and communication type. On the surface, the server uses one port to realize multiple connections, but this port is used for monitoring, and the bottom layer is used for communication with the client is actually another socket. So every time a connection comes over, the socket responsible for monitoring finds that it is a packet (syn packet) that establishes a connection, and it will generate a new socket to communicate with (the one returned when accepting).

The listening socket only saves the IP and port it is listening on. The communication socket first copies the IP and port from the listening socket, and then records the client's IP and port. When a packet is received next time, the operating system will Find the socket from the socket pool according to the quadruple to complete the data processing.

The serial mode is to pick a node from the queue that has completed the three-way handshake, and then process it. Pick another node and process it again. If there is blocking IO in the process of processing, you can imagine how low the efficiency is. And when the amount of concurrency is relatively large, the queue corresponding to the listening socket will soon be full (the completed connection queue has a maximum length). This is the simplest mode, and although it is certainly not used in the design of the server, it gives us an idea of the overall process of a server handling requests.

1.4.2 Multi-process mode

In serial mode, all requests are queued and processed in one process, which is the reason for inefficiency. At this time, we can divide the request to multiple processes to improve efficiency, because in the serial processing mode, if there is a blocking IO operation, it will block the main process, thereby blocking the processing of subsequent requests. In the multi-process mode, if a request blocks a process, the operating system will suspend the process, and then schedule other processes to execute, so that other processes can perform new tasks. There are several types in multi-process mode.

-

The main process accepts, the child process processes the request

In this mode, the main process is responsible for extracting the node that has completed the connection, and then handing over the request corresponding to this node to the child process for processing. The logic is as follows.

while(1) {

const socketForCommunication = accept(socket);

if (fork() > 0) {

continue;

// parent process } else {

// child process handle(socketForCommunication);

}

}

In this mode, every time a request comes, a new process will be created to handle it. This mode is slightly better than serial. Each request is processed independently. Assuming that a request is blocked in file IO, it will not affect the processing of b request, and it is as concurrent as possible. Its bottleneck is that the number of processes in the system is limited. If there are a large number of requests, the system cannot handle it. Furthermore, the overhead of the process is very large, which is a heavy burden for the system.

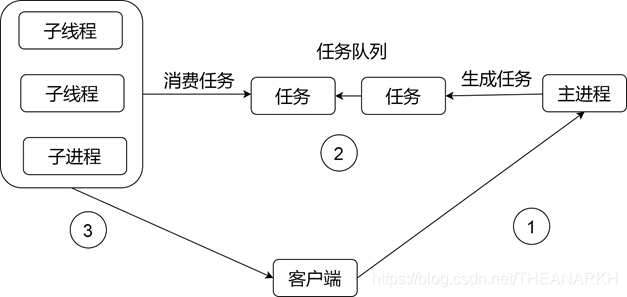

- Process Pool Mode Creating and destroying processes in real time is expensive and inefficient, so the process pool mode is derived. The process pool mode is to create a certain number of processes in advance when the server starts, but these processes are worker processes. It is not responsible for accepting requests. It is only responsible for processing requests. The main process is responsible for accept, and it hands the socket returned by accept to the worker process for processing, as shown in the figure below.

However, compared with the mode in 1, the process pool mode is relatively complicated, because in mode 1, when the main process receives a request, it will fork a child process in real time. At this time, the child process will inherit the new request in the main process. The corresponding fd, so it can directly process the request corresponding to the fd.

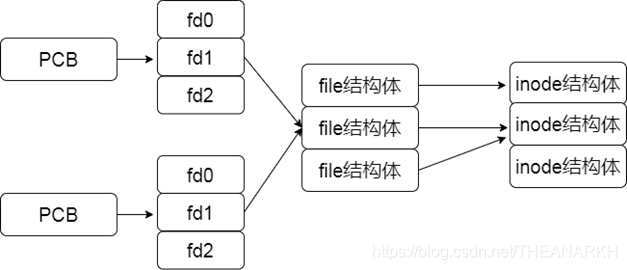

In the process pool mode, the child process is created in advance. When the main process receives a request, the child process cannot get the fd corresponding to the request. of. At this time, the main process needs to use the technique of passing file descriptors to pass the fd corresponding to this request to the child process. A process is actually a structure task_struct. In JS, we can say it is an object. It has a field that records the open file descriptor. When we access a file descriptor, the operating system will be based on the value of fd.

Find the underlying resource corresponding to fd from task_struct, so when the main process passes the file descriptor to the child process, it passes more than just a number fd, because if you only do this, the fd may not correspond to any resources in the child process, Or the corresponding resources are inconsistent with those in the main process. The operating system does a lot of things for us. Let us access the correct resource through fd in the child process, that is, the request received in the main process.

- child process accept

This mode is not to wait until the request comes and then create the process. Instead, when the server starts, multiple processes are created. Then multiple processes call accept respectively. The architecture of this mode is shown in Figure 1-8.

const socketfd = socket (configuration such as protocol type);

bind(socketfd, listening address)

for (let i = 0 ; i < number of processes; i++) {

if (fork() > 0) {

// The parent process is responsible for monitoring the child process} else {

// The child process handles the request listen(socketfd);

while(1) {

const socketForCommunication = accept(socketfd);

handle(socketForCommunication);

}

}

}

In this mode, multiple child processes are blocked in accept. If a request arrives at this time, all child processes will be woken up, but the child process that is scheduled first will take off the request node first. After the subsequent process is woken up, it may encounter that there is no request to process, and enter again. Sleep, the process is woken up ineffectively, which is the famous phenomenon of shocking herd. The improvement method is to add a lock before accept, and only the process that gets the lock can accept, which ensures that only one process will be blocked in accept. Nginx solves this problem, but the new version of the operating system has solved this problem at the kernel level. Only one process will be woken up at a time. Usually this pattern is used in conjunction with event-driven.

1.4.3 Multi-threading mode

Multi-threading mode is similar to multi-process mode, and it is also divided into the following

types:

- main process accept

- create sub-thread processing

- sub-thread accept

- The first two thread pools are the same as in the multi-process mode, but the third one is special, and we mainly introduce the third one. In the subprocess mode, each subprocess has its own task_struct, which means that after fork, each process is responsible for maintaining its own data, while the thread is different. The thread shares the data of the main thread (main process). , when the main process gets an fd from accept, if it is passed to the thread, the thread can operate directly. So in the thread pool mode, the architecture is shown in the following figure.

The main process is responsible for accepting the request, and then inserting a task into the shared queue through mutual exclusion. The child threads in the thread pool also extract nodes from the shared queue for processing through mutual exclusion.

1.4.4 Event-driven

Many servers (Nginx, Node.js, Redis) are now designed using the event-driven pattern. We know from previous design patterns that in order to handle a large number of requests, the server needs a large number of processes/threads. This is a very large overhead.

The event-driven mode is generally used with a single process (single thread). But because it is a single process, it is not suitable for CPU intensive, because if a task keeps occupying the CPU, subsequent tasks cannot be executed. It is more suitable for IO-intensive (generally provides a thread pool, responsible for processing CPU or blocking tasks). When using the multi-process/thread mode, a process/thread cannot occupy the CPU all the time.

After a certain period of time, the operating system will perform task scheduling. Let other threads also have the opportunity to execute, so that the previous tasks will not block the later tasks and starvation will occur. Most operating systems provide event-driven APIs. But event-driven is implemented differently in different systems. So there is usually a layer of abstraction to smooth out this difference. Here is an example of Linux epoll.

// create an epoll

var epollFD = epoll_create();

/*

Register an event of interest for a file descriptor in epoll, here is the listening socket, register a readable event, that is, the connection is coming event = {

event: readable fd:

Libuv data structure and general logic

2.1 Core structure uv_loop_s

uv_loop_s is the core data structure of Libuv, and each event loop corresponds to a uv_loop_s structure. It records core data throughout the event loop. Let's analyze the meaning of each field.

1 Field void* data of user-defined data;

2 The number of active handles, which will affect the use of the loop to exit unsigned int active_handles;

3 handle queue, including active and inactive void* handle_queue[2];

The number of 4 requests will affect the exit of the event loop union { void* unused[2]; unsigned int count; } active_reqs;

5 The flag for the end of the event loop unsigned int stop_flag;

6 Some flags run by Libuv, currently only UV_LOOP_BLOCK_SIGPROF, mainly used to block the SIGPROF signal when epoll_wait, improve performance, SIGPROF is a signal unsigned long flags triggered by the setting of the operating system settimer function;

7 fd of epoll

int backend_fd;

8 pending stage queue void* pending_queue[2];

9 points to the uv__io_t structure queue that needs to register events in epoll void* watcher_queue[2];

10 There is an fd field in the node of the watcher_queue queue, watchers use fd as the index, record the uv__io_t structure uv__io_t** watchers where fd is located;

11 The number of watchers related, set unsigned int nwatchers in maybe_resize function;

12 The number of fds in watchers, generally the number of nodes in the watcher_queue queue unsigned int nfds;

13 After the child thread of the thread pool processes the task, insert the corresponding structure into the wq queue void* wq[2];

14 Control the mutually exclusive access of the wq queue, otherwise there will be problems with simultaneous access by multiple child threads uv_mutex_t wq_mutex;

15 for the sub-thread of the thread pool and the main thread to communicate uv_async_t wq_async;

16 Mutex variable for read-write lock uv_rwlock_t cloexec_lock;

17 Queue in the close phase of the event loop, generated by uv_close uv_handle_t* closing_handles;

18 Process queue from fork void* process_handles[2];

19 Task queue corresponding to the prepare phase of the event loop void* prepare_handles[2];

20 Task queue corresponding to the check phase of the event loop void* check_handles[2];

21 The task queue corresponding to the idle phase of the event loop void* idle_handles[2];

21 async_handles queue, the Poll IO stage executes uv_async_io to traverse the async_handles queue to process the node with pending 1 void* async_handles[2];

22 is used to monitor whether there is an async handle task that needs to be processed uv__io_t async_io_watcher;

23 The write side fd used to save the communication between the child thread and the main thread

int async_wfd;

24 Save the timer binary heap structure struct {

void* min;

unsigned int nelts;

} timer_heap;

25 Manage the id of the timer node, and continuously superimpose uint64_t timer_counter;

26 At the current time, Libuv will update the current time at the beginning of each event loop and in the Poll IO stage, and then use it in subsequent stages to reduce the uint64_t time for system calls;

27 The pipeline used for the communication between the forked process and the main process, used to notify the main process when the child process receives a signal, and then the main process executes the callback registered by the child process node int signal_pipefd[2];

28 Similar to async_io_watcher, signal_io_watcher saves the pipeline read end fd and callback, and then registers it in epoll. When the child process receives the signal, it writes to the pipeline through write, and finally executes the callback uv_io_t signal_io_watcher in the Poll IO stage;

29 handle used to manage the exit signal of the child process

uv_signal_t child_watcher;

30 spare fd

int emfile_fd;

2.2 uv_handle_t

In Libuv, uv_handle_t is similar to the base class in C++, and many subclasses inherit from it. Libuv mainly obtains the effect of inheritance by controlling the layout of memory. handle represents an object with a long life cycle. E.g 1 An active prepare handle whose callback will be executed each time the event loops.

2 A TCP handle executes its callback every time a connection arrives.

Let's take a look at uv_handle_t Definition of

1 Custom data, used to associate some contexts, used in Node.js to associate the C++ object void\* data to which handle belongs;

2 belongs to the event loop uv_loop_t\* loop;

3 handle type uv_handle_type type;

4 After the handle calls uv_close, the callback uv_close_cb that is executed in the closing phase close_cb;

5 The front and rear pointers used to organize the handle queue void\* handle_queue[2];

6 file descriptor union {

int fd;

void\* reserved[4];

} u;

7 When the handle is in the close queue, this field points to the next close node uv_handle_t\* next_closing;

8 handle status and flag unsigned int flags;

2.2.1 uv_stream_s

uv_stream_s is a structure representing a stream. In addition to inheriting the fields of uv_handle_t, it additionally defines the following fields

1 The number of bytes waiting to be sent size_t write_queue_size;

2 Function to allocate memory uv_alloc_cb alloc_cb;

3 Callback uv_read_cb read_cb executed when reading data is successful;

4 Initiate the structure corresponding to the connection uv_connect_t \*connect_req;

5 Close the structure uv_shutdown_t \*shutdown_req corresponding to the write end;

6 Used to insert epoll, register read and write events uv\_\_io_t io_watcher;

7 queue to be sent void\* write_queue[2];

8 Send completed queue void\* write_completed_queue[2];

9 Callback uv_connection_cb connection_cb executed when connection is received;

10 Error code for socket operation failure int delayed_error;

11 fd returned by accept

int accepted_fd;

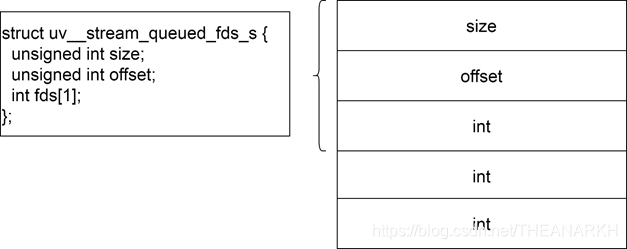

12 An fd has been accepted, and there is a new fd, temporarily stored void\* queued_fds;

2.2.2 uv_async_s

uv_async_s is a structure that implements asynchronous communication in Libuv. Inherited from uv_handle_t and additionally defines the following fields.

1 Callback uv_async_cb executed when an asynchronous event is triggered async_cb;

2 is used to insert the async-handles queue void* queue[2];

3 The node pending field in the async_handles queue is 1, indicating that the corresponding event has triggered int pending;

2.2.3 uv_tcp_s

uv_tcp_s inherits uv_handle_s and uv_stream_s.

2.2.4 uv_udp_s

1 send bytes size_t send_queue_size;

2 The number of write queue nodes size_t send_queue_count;

3 Allocate the memory for receiving data uv_alloc_cb alloc_cb;

4 Callback uv_udp_recv_cb recv_cb executed after receiving data;

5 Insert the IO watcher in epoll to realize data read and write uv__io_t io_watcher;

6 queue to be sent void* write_queue[2];

7 Send the completed queue (success or failure to send), related to the queue to be sent void* write_completed_queue[2];

2.2.5 uv_tty_s

uv_tty_s inherits from uv_handle_t and uv_stream_t. The following fields are additionally defined.

1 The parameters of the terminal struct termios orig_termios;

2 The working mode of the terminal int mode;

2.2.6 uv_pipe_s

uv_pipe_s inherits from uv_handle_t and uv_stream_t. The following fields are additionally defined.

1 marks whether the pipe can be used to pass the file descriptor int ipc;

2 File path for Unix domain communication const char* pipe_fname;

2.2.7 uv_prepare_s, uv_check_s, uv_idle_s

The above three structure definitions are similar, they all inherit uv_handle_t and define two additional fields.

1 prepare, check, idle stage callback uv_xxx_cb xxx_cb;

2 is used to insert prepare, check, idle queue void* queue[2];

2.2.8 uv_timer_s

uv_timer_s inherits uv_handle_t and additionally defines the following fields.

1 timeout callback uv_timer_cb timer_cb;

2 Insert the field of the binary heap void* heap_node[3];

3 timeout uint64_t timeout;

4 Whether to continue to re-time after the timeout, if so, re-insert the binary heap uint64_t repeat;

5 id mark, used to compare uint64_t start_id when inserting binary heap

2.2.9 uv_process_s

uv_process_s inherits uv_handle_t and additionally defines

1 Callback executed when the process exits uv_exit_cb exit_cb;

2 process id

int pid;

3 for inserting queues, process queues or pending queues void\* queue[2];

4 Exit code, set int status when the process exits;

2.2.10 uv_fs_event_s

uv_fs_event_s is used to monitor file changes. uv_fs_event_s inherits uv_handle_t and additionally defines

1 Monitored file path (file or directory)

char\* path;

2 The callback uv_fs_event_cb cb executed when the file changes;

2.2.11 uv_fs_poll_s

uv_fs_poll_s inherits uv_handle_t and additionally defines

1 poll_ctx points to poll_ctx structure void\* poll_ctx;

struct poll*ctx {

// corresponding handle

uv_fs_poll_t* parent_handle;

// Mark whether to start polling and the reason for failure when polling int busy_polling;

// How often to check if the file content has changed unsigned int interval;

// The start time of each round of polling uint64_t start_time;

// belongs to the event loop uv_loop_t* loop;

// Callback when the file changes uv_fs_poll_cb poll_cb;

// Timer for polling uv_timer_t timer_handle after timing timeout;

// Record the context information of polling, file path, callback, etc. uv_fs_t fs_req;

// Save the file information returned by the operating system when polling uv_stat_t statbuf;

// The monitored file path, the string value is appended to the structure char path[1]; /* variable length \_/

};

2.2.12 uv_poll_s

uv_poll_s inherits from uv_handle_t and additionally defines the following fields.

1 Callback uv_poll_cb poll_cb executed when the monitored fd has an event of interest;

2 Save the IO watcher of fd and callback and register it in epoll uv__io_t io_watcher;

2.1.13 uv_signal_s

uv_signal_s inherits uv_handle_t and additionally defines the following fields

1 Callback uv_signal_cb signal_cb when a signal is received;

2 registered signal int signum;

3 It is used to insert the red-black tree. The process encapsulates the signals and callbacks of interest into uv_signal_s, and then inserts it into the red-black tree. When the signal arrives, the process writes the notification to the pipeline in the signal processing number to notify Libuv. Libuv will execute the callback corresponding to the process in the Poll IO stage. The definition of a red-black tree node is as follows struct {

struct uv_signal_s* rbe_left;

struct uv_signal_s* rbe_right;

struct uv_signal_s\* rbe_parent;

int rbe_color;

} tree_entry;

4 Number of received signals unsigned int caught_signals;

5 Number of processed signals unsigned int dispatched_signals;

2.3 uv_req_s

Send the callback for execution (success or failure) uv_udp_send_cb send_cb;

### 2.3.5 uv_getaddrinfo_s

uv_getaddrinfo_s represents a DNS request to query IP through domain name, additionally defined field

```cpp

1 belongs to the event loop uv_loop_t\* loop;

2 Node struct uv\_\_work work_req for inserting into the thread pool task queue during asynchronous DNS resolution;

3 Callback uv_getaddrinfo_cb cb executed after DNS resolution;

4 DNS query configuration struct addrinfo* hints;

char* hostname;

char\* service;

5 DNS resolution result struct addrinfo\* addrinfo;

6 DNS resolution return code int retcode;

2.3.6 uv_getnameinfo_s

uv_getnameinfo_s represents a DNS query request to query the domain name through IP, and the additionally defined field

1 belongs to the event loop uv_loop_t\* loop;

2 Node struct uv\_\_work work_req for inserting into the thread pool task queue during asynchronous DNS resolution;

3 Callback for socket transfer domain name completion uv_getnameinfo_cb getnameinfo_cb;

4 The socket structure struct sockaddr_storage storage that needs to be transferred to the domain name;

5 Indicates the information returned by the query int flags;

6 Query the returned information char host[NI_MAXHOST];

char service[NI_MAXSERV];

7 Query return code int retcode;

2.3.7 uv_work_s

uv_work_s is used to submit tasks to the thread pool, additionally defined fields

1 belongs to the event loop uv_loop_t\* loop;

2 Function uv_work_cb work_cb for processing tasks;

3 The function uv_after_work_cb after_work_cb executed after the task is processed;

4 Encapsulate a work and insert it into the thread pool queue. The work and done functions of work_req are the encapsulation of the above work_cb and after_work_cb struct uv\_\_work work_req;

uv_fs_s

uv_fs_s represents a file operation request, additionally defined fields

1 file operation type uv_fs_type fs_type;

2 belongs to the event loop uv_loop_t\* loop;

3 Callback uv_fs_cb cb for file operation completion;

4 Return code of file operation ssize_t result;

5 Data returned by file operation void\* ptr;

6 File operation path const char\* path;

7 stat information of the file uv_stat_t statbuf;

8 When the file operation involves two paths, save the destination path const char \*new_path;

9 file descriptor uv_file file;

10 file flags int flags;

11 Operation mode mode_t mode;

12 The data and number passed in when writing the file unsigned int nbufs;

uv_buf_t\* bufs;

13 file offset off_t off;

14 Save the uid and gid that need to be set, such as uv_uid_t uid when chmod;

uv_gid_t gid;

15 Save the file modification and access time that need to be set, such as double atime when fs.utimes;

double mtime;

16 When asynchronous, it is used to insert the task queue, save the work function, and call back the function struct uv\_\_work work_req;

17 Save the read data or length. e.g. read and sendfile

uv_buf_t bufsml[4];

2.4 IO Observer

IO observer is the core concept and data structure in Libuv. Let's take a look at its definition

1 struct uv\_\_io_s {

2 // Callback after the event is triggered 3. uv\_\_io_cb cb;

3 // Used to insert the queue 5. void\* pending_queue[2];

4 void\* watcher_queue[2];

5 // Save the event of interest this time and set it when inserting the IO observer queue 8. unsigned int pevents;

6 // Save the current events of interest 10. unsigned int events;

7 int fd;

8 };

The IO observer encapsulates the file descriptor, events and callbacks, and then inserts it into the IO observer queue maintained by the loop. In the Poll IO stage, Libuv will register the file descriptor with the underlying event-driven module according to the information described by the IO observer. events of interest. When the registered event is triggered, the callback of the IO observer will be executed. Let's look at some logic of how to start the IO observer.

2.4.1 Initialize IO observer

1 void uv**io_init(uv**io_t\* w, uv\_\_io_cb cb, int fd) {

2 // Initialize the queue, callback, fd that needs to be monitored

3 QUEUE_INIT(&w->pending_queue);

4 QUEUE_INIT(&w->watcher_queue);

5 w->cb = cb;

6 w->fd = fd;

7 // Events of interest when epoll was added last time, set 8. w->events = 0;

8 // Currently interested events, set 10. w->pevents = 0 before executing the epoll function again

9 }

2.4.2 Register an IO observer to Libuv.

1. void uv__io_start(uv_loop_t* loop, uv__io_t* w, unsigned int events) {

2. // Set the current events of interest 3. w->pevents |= events;

4. // May need to expand 5. maybe_resize(loop, w->fd + 1);

6. // If the event has not changed, return directly 7. if (w->events == w->pevents)

7. if ((unsigned) w->fd >= loop->nwatchers)

8. return;

9. // If the IO watcher is not mounted elsewhere, insert it into Libuv's IO watcher queue 10. if (QUEUE_EMPTY(&w->watcher_queue))

11. QUEUE_INSERT_TAIL(&loop->watcher_queue, &w->watcher_queue);

12. // Save the mapping relationship 13. if (loop->watchers[w->fd] == NULL) {

14. loop->watchers[w->fd] = w;

15. loop->nfds++;

16. }

The uv__io_start function is to insert an IO observer into the observer queue of Libuv, and save a mapping relationship in the watchers array. Libuv will process the IO observer queue during the Poll IO phase.

2.4.3 Cancel the IO observer or the event uv

__io_stop to modify the events that the IO observer is interested in. If there are still interesting events, the IO observer will still be in the queue, otherwise it will be removed from

1. void uv\_\_io_stop(uv_loop_t\* loop,

2. uv\_\_io_t\* w,

3. unsigned int events) {

4. if (w->fd == -1)

5. return;

6. assert(w->fd >= 0);

7. if ((unsigned) w->fd >= loop->nwatchers)

8. return;

9. // Clear the previously registered events and save them in pevents, indicating the currently interesting events 10. w->pevents &= ~events;

10. // Not interested in all events 12. if (w->pevents == 0) {

11. // Remove the IO watcher queue 14. QUEUE_REMOVE(&w->watcher_queue);

12. // reset 16. QUEUE_INIT(&w->watcher_queue);

mark, and record the number of active handles plus one. Only handles in REF and ACTIVE state will affect the exit of the event loop.

2.5.4 uv__req_init

uv__req_init initializes the type of request and records the number of requests, which will affect the exit of the event loop.

1. #define uv__req_init(loop, req, typ)

2. do {

3. (req)->type = (typ);

4. (loop)->active_reqs.count++;

5. }

6. while (0)

2.5.5. uv__req_register

The number of requests plus one

1. #define uv\_\_req_register(loop, req)

2. do {

3. (loop)->active_reqs.count++;

4. }

5. while (0)

2.5.6. uv__req_unregister

The number of requests minus one

1. #define uv__req_unregister(loop, req)

2. do {

3. assert(uv__has_active_reqs(loop));

4. (loop)->active_reqs.count--;

5. }

6. while (0)

2.5.7. uv__handle_ref

uv__handle_ref marks the handle as the REF state. If the handle is in the ACTIVE state, the number of active handles is increased by one

1. #define uv\_\_handle_ref(h)

2. do {

3. if (((h)->flags & UV_HANDLE_REF) != 0) break;

4. (h)->flags |= UV_HANDLE_REF;

5. if (((h)->flags & UV_HANDLE_CLOSING) != 0) break;

6. if (((h)->flags & UV_HANDLE_ACTIVE) != 0) uv\_\_active_handle_add(h);

7. }

8. while (0)

9. uv\_\_handle_unref

uv__handle_unref removes the REF state of the handle. If the handle is in the ACTIVE state, the number of active handles is reduced by one

1. #define uv\_\_handle_unref(h)

2. do {

3. if (((h)->flags & UV_HANDLE_REF) == 0) break;

4. (h)->flags &= ~UV_HANDLE_REF;

5. if (((h)->flags & UV_HANDLE_CLOSING) != 0) break;

6. if (((h)->flags & UV_HANDLE_ACTIVE) != 0) uv\_\_active_handle_rm(h);

7. }

8. while (0)

Event Loop

Node.js belongs to the single-threaded event loop architecture. The event loop is implemented by the uv_run function of Libuv. The while loop is executed in this function, and then the event callbacks of each phase are continuously processed.

The processing of the event loop is equivalent to a consumer, consuming tasks generated by various codes. After Node.js is initialized, it begins to fall into the event loop, and the end of the event loop also means the end of Node.js. Let's take a look at the core code of the event loop.

int uv_run(uv_loop_t* loop, uv_run_mode mode) {

int timeout;

int r;

int ran_pending;

// Submit the task to loop before uv_run

r = uv__loop_alive(loop);

// The event loop has no tasks to execute and is about to exit. Set the time of the current loop if (!r)

uv__update_time(loop);

// Exit the event loop if there is no task to process or uv_stop is called while (r != 0 && loop->stop_flag == 0) {

// Update the time field of loop uv__update_time(loop);

// Execute timeout callback uv__run_timers(loop);

/*

Execute the pending callback, ran_pending represents whether the pending queue is empty,

i.e. no node can execute */

ran_pending = uv__run_pending(loop);

// Continue to execute various queues uv__run_idle(loop);

uv__run_prepare(loop);

timeout = 0;

/*

When the execution mode is UV_RUN_ONCE, if there is no pending node,

Only blocking Poll IO, the default mode is also */

if ((mode == UV_RUN_ONCE && !ran_pending) ||

mode == UV_RUN_DEFAULT)

timeout = uv_backend_timeout(loop);

// Poll IO timeout is the timeout of epoll_wait uv__io_poll(loop, timeout);

// Process the check phase uv__run_check(loop);

// handle the close phase uv__run_closing_handles(loop);

/*

There is also a chance to execute the timeout callback, because uv__io_poll may return because the timer expired.

*/

if (mode == UV_RUN_ONCE) {

uv__update_time(loop);

uv__run_timers(loop);

}

r = uv__loop_alive(loop);

/*

Execute only once, exit the loop, UV_RUN_NOWAIT means that it will not block in the Poll IO stage and the loop will only execute once */

if (mode == UV_RUN_ONCE || mode == UV_RUN_NOWAIT)

break;

}

// exited because uv_stop was called, reset the flag

if (loop->stop_flag != 0)

loop->stop_flag = 0;

/*

Returns whether there are still active tasks (handle or request),

The agent can execute uv_run again

*/

return r;

}

Libuv is divided into several stages. The following is from first to last, and the relevant codes of each stage are analyzed separately.

3.1 Event loop timer In Libuv

the timer stage is the first stage to be processed. The timer is implemented as a min heap, and the node that expires the fastest is the root node. Libuv caches the current time at the beginning of each event loop.

In each round of the event loop, the cached time is used. When necessary, Libuv will explicitly update this time, because the operation needs to be called to obtain the time. The interface provided by the system, and frequently calling the system call will bring a certain amount of time. The cache time can reduce the calls of the operating system and improve the performance.

After Libuv caches the current latest time, it executes uv__run_timers, which traverses the minimum heap and finds the current timeout node. Because the nature of the heap is that the parent node is definitely smaller than the child. And the root node is the smallest, so if a root node, it doesn't time out, the following nodes also don't time out. For the node that times out, its callback is executed. Let's look at the specific logic.

void uv__run_timers(uv_loop_t* loop) {

struct heap_node* heap_node;

uv_timer_t* handle;

// Traverse the binary heap for (;;) {

// Find the smallest node heap_node = heap_min(timer_heap(loop));

// if not exit if (heap_node == NULL)

break;

// Find the first address of the structure through the structure field handle = container_of(heap_node, uv_timer_t, heap_node);

// The smallest node does not have a supermarket, and the subsequent nodes will not time out if (handle->timeout > loop->time)

break;

// delete the node uv_timer_stop(handle);

/*

Retry inserting into the binary heap, if necessary (repeat is set, such as setInterval)

*/

uv_timer_again(handle);

// Execute callback handle->timer_cb(handle);

}

}

After executing the callback, there are two key operations, the first is stop, and the second is again. The logic of stop is very simple, that is, delete the handle from the binary heap and modify the state of the handle.

So what is again? again is to support the scenario of setInterval. If the handle is set with the repeat flag, the handle will continue to execute the timeout callback after every repeat time after the handle times out. For setInterval, the timeout is x, and the callback is executed after every x time.

This is the underlying principle of timers in Node.js. But Node.js does not insert a node into the min heap every time setTimeout/setInterval is adjusted. In Node.js, there is only one handle about uv_timer_s, which maintains a data structure in the JS layer, and calculates the earliest expiration every time. node, and then modify the timeout time of the handle, which is explained in the timer chapter.

In addition, the timer stage is also related to the Poll IO stage, because Poll IO may cause the main thread to block. In order to ensure that the main thread can execute the timer callback as soon as possible, Poll IO cannot block all the time, so at this time, the blocking time is the fastest The duration of the timer node of the period (for details, please refer to the uv_backend_timeout function in libuv core.c).

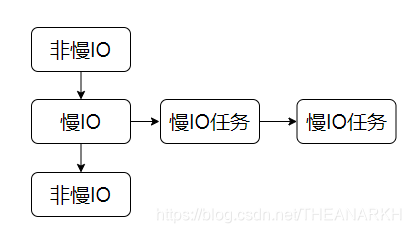

3.2 pending stage

The official website's explanation of the pending stage is that the IO callbacks that were not executed in the Poll IO stage of the previous round will be executed in the pending stage of the next round of loops.

From the source code point of view, when processing tasks in the Poll IO stage, in some cases, if the currently executed operation fails, a callback function needs to be executed to notify the caller of some information.

The callback function will not be executed immediately, but will be pending in the next round of the event loop. Stage execution (such as successful writing of data, or callback to the C++ layer when the TCP connection fails), let's first look at the processing of the pending stage.

static int uv__run_pending(uv_loop_t* loop) {

QUEUE* q;

QUEUE pq;

uv__io_t* w;

if (QUEUE_EMPTY(&loop->pending_queue))

return 0;

// Move the node of the pending_queue queue to pq, that is, clear the pending_queue

QUEUE_MOVE(&loop->pending_queue, &pq);

// Traverse the pq queue while (!QUEUE_EMPTY(&pq)) {

// Take out the current first node to be processed, ie pq.next

q = QUEUE_HEAD(&pq);

// Remove the current node to be processed from the queue QUEUE_REMOVE(q);

/*

Reset the prev and next pointers, because at this time these two pointers point to the two nodes in the queue */

QUEUE_INIT(q);

w = QUEUE_DATA(q, uv__io_t, pending_queue);

w->cb(loop, w, POLLOUT);

}

return 1;

}

The processing logic of the pending phase is to execute the nodes in the pending queue one by one. Let's take a look at how the nodes of the pending queue are produced.

void uv__io_feed(uv_loop_t* loop, uv__io_t* w) {

if (QUEUE_EMPTY(&w->pending_queue))

QUEUE_INSERT_TAIL(&loop->pending_queue, &w->pending_queue);

}

Libuv generates pending tasks through the uvio_feed function. From the Libuv code, we will call this function when we see IO errors (such as the uvtcp_connect function of tcp.c).

if (handle->delayed_error)

uv__io_feed(handle->loop, &handle->io_watcher);

After the data is written successfully (such as TCP, UDP), a node is also inserted into the pending queue, waiting for a callback. For example, the code executed after sending data successfully (uv__udp_sendmsg function of udp.c)

// Move out of the write queue after sending QUEUE_REMOVE(&req->queue);

// Join the write completion queue QUEUE_INSERT_TAIL(&handle->write_completed_queue, &req->queue);

/*

After some node data is written, insert the IO observer into the pending queue,

Execute callback in pending stage */

uv__io_feed(handle->loop, &handle->io_watcher);

When the IO is finally closed (such as closing a TCP connection), the corresponding node will be removed from the pending queue. Because it has been closed, naturally there is no need to execute the callback.

void uv__io_close(uv_loop_t* loop, uv__io_t* w) {

uv__io_stop(loop,

w,

POLLIN | POLLOUT | UV__POLLRDHUP | UV__POLLPRI);

QUEUE_REMOVE(&w->pending_queue);

}

3.3 prepare, check, idle of event loop

prepare, check, and idle are relatively simple stages in the Libuv event loop, and their implementations are the same (see loop-watcher.c). This section only explains the prepare stage. We know that Libuv is divided into handle and request, and the tasks of the prepare stage belong to the handle type. This means that nodes in the prepare phase are executed every time the event loop occurs unless we explicitly remove them. Let's first see how to use it.

void prep_cb(uv_prepare_t *handle) {

printf("Prep callback\n");

}

int main() {

uv_prepare_t prep;

// Initialize a handle, uv_default_loop is the core structure of the event loop uv_prepare_init(uv_default_loop(), &prep);

// Register handle callback uv_prepare_start(&prep, prep_cb);

// Start the event loop uv_run(uv_default_loop(), UV_RUN_DEFAULT);

return 0;

}

When the main function is executed, Libuv will execute the callback prep_cb in the prepare phase. Let's analyze this process.

int uv_prepare_init(uv_loop_t* loop, uv_prepare_t* handle) {

uv__handle_init(loop, (uv_handle_t*)handle, UV_PREPARE);

handle->prepare_cb = NULL;

returnThe node is removed from the current queue QUEUE_REMOVE(q);

// Reinsert the original queue QUEUE_INSERT_TAIL(&loop->prepare_handles, q);

// Execute the callback function h->prepare_cb(h);

}

}

The logic of the uv__run_prepare function is very simple, but one key point is that after each node is executed, Libuv will re-insert the node into the queue, so the nodes in the prepare (including idle, check) stage will be executed in each round of the event loop. implement. Nodes such as timer, pending, and closing stages are one-time and will be removed from the queue after being executed. Let's review the test code at the beginning.

Because it sets the operating mode of Libuv to be the default mode. The prepare queue always has a handle node, so it will not exit. It will always execute the callback. So what if we want to quit? Or do not execute a node of the prepare queue. We just need to stop it once.

int uv_prepare_stop(uv_prepare_t* handle) {

if (!uv__is_active(handle)) return 0;

// Remove the handle from the prepare queue, but also mount it in handle_queue QUEUE_REMOVE(&handle->queue);

// Clear the active flag bit and subtract the active number of handles in the loop uv__handle_stop(handle);

return 0;

}

The stop function and the start function have opposite functions, which is the principle of the prepare, check, and idle phases in Node.js.

3.4 Poll IO of event loop